Emotion recognition is a hot topic in artificial intelligence. The most interesting applications of such technologies include:

- recognizing the driver's condition (excited or calm);
- marketing research on audience composition (men, women or children);
- noise assessment systems for smart cities;
- detecting the presence of people;
- monitoring the state of students in schools and universities (working or excited) and assessing the noise level in classrooms;
- detecting extraneous noise in enclosed spaces (rodents, a leaking tap or a starting fire) and increased noise from a pump, compressor, refrigerator or air conditioner, in order to prevent an accident.

In emotion recognition, the voice is the second most important source of emotional data after the face. A voice can be characterized by several parameters, and pitch, which corresponds to the fundamental frequency (F0), is one of them.

Typical fundamental frequencies of the voice are:

| Voice | Typical F0 range | Wider range | Average F0 |
|---|---|---|---|
| Adult man | 85–155 Hz | 70–200 Hz | 120 Hz |
| Adult woman | 165–255 Hz | 140–400 Hz | 220 Hz |
| Child | 170–600 Hz | – | 320 Hz |
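To show how these ranges could be used, here is a minimal sketch. It assumes a single voiced frame of mono audio given as a list of floats. The fundamental frequency is estimated by autocorrelation, a common textbook method that is not necessarily the one used in the system described below, and the result is mapped to the speaker group whose average F0, as quoted above, is nearest. The function names, the 70–600 Hz search limits and the nearest-average rule are all assumptions made for illustration. Because the adult female and child ranges overlap, pitch alone gives only a rough guess.

```python
import math

# Average fundamental frequencies (Hz) quoted in the text above.
AVERAGE_F0_HZ = {"adult man": 120.0, "adult woman": 220.0, "child": 320.0}


def estimate_f0(samples, sample_rate, f_min=70.0, f_max=600.0):
    """Estimate the fundamental frequency (Hz) of one voiced frame by autocorrelation.

    Only lags corresponding to f_min..f_max are searched (assumed limits that
    cover the voice ranges quoted above).
    """
    n = len(samples)
    mean = sum(samples) / n
    x = [s - mean for s in samples]  # remove any DC offset
    min_lag = max(1, int(sample_rate / f_max))
    max_lag = min(int(sample_rate / f_min), n - 1)
    best_lag, best_corr = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Unnormalised autocorrelation at this lag.
        corr = sum(x[i] * x[i + lag] for i in range(n - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return sample_rate / best_lag


def nearest_voice_type(f0_hz):
    """Hypothetical rule: pick the group whose average F0 is closest to f0_hz."""
    return min(AVERAGE_F0_HZ, key=lambda group: abs(AVERAGE_F0_HZ[group] - f0_hz))


if __name__ == "__main__":
    sr = 8000  # sample rate, Hz
    # Synthetic 0.2 s test tone at 220 Hz standing in for a voiced frame.
    frame = [math.sin(2 * math.pi * 220 * t / sr) for t in range(sr // 5)]
    f0 = estimate_f0(frame, sr)
    print(f"F0 ~ {f0:.0f} Hz -> {nearest_voice_type(f0)}")
```

Plain autocorrelation is easy to follow but prone to octave errors on real speech. A production system would more likely use a normalised or more robust pitch tracker.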

Emotions play a huge role in human life and interpersonal communication. They are expressed in various ways: facial expressions, posture, motor reactions, voice and autonomic reactions (heart rate, blood pressure, respiratory rate). One of the most significant signs is that the pitch and loudness of the voice rise with emotional excitement. Compare calm speech with exclamations of joy or shouting in anger.

J. Russell's model places each emotion in a two-dimensional space, where it is characterized by its sign (valence: positive or negative) and its intensity (arousal).

Analysis of speech signals cannot determine the sign of an emotion, that is, whether it is positive or negative. It can only determine the emotion's intensity, ranging from depression to arousal.

We take as the norm the neutral state a person is in when not exposed to any stimuli.

We therefore consider three distinct emotional states: depression, the norm and arousal.

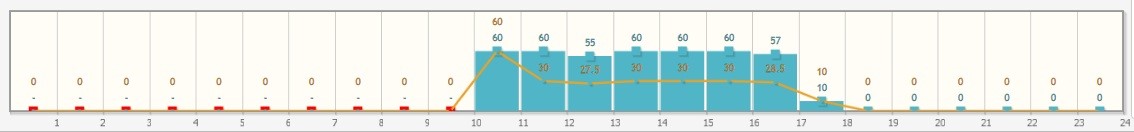

The audience is considered to be in a satisfactory (calm) state if the spectral maximum falls in the 200–240 Hz band (channel 1), and excited if it falls in the 300–360 Hz band (channel 2).

The third recording channel confirms the direction of the spectral shift. A shift toward lower frequencies confirms channel 1, and a shift toward higher frequencies confirms channel 2.
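The channel logic can be sketched as follows, assuming the audio is available as a list of samples. The sketch scans 150–400 Hz in 5 Hz steps with the Goertzel algorithm, finds the frequency of the spectral maximum and checks which of the two bands it falls in. The text does not give the band of the third channel. Here it is modelled hypothetically as a comparison of the energy below and above 270 Hz, the midpoint between the two bands. The scan range, step, split frequency and function names are assumptions for illustration, not parameters of the D100FC sensor.

```python
import math

# Frequency bands from the text (Hz).
CHANNEL_1 = (200.0, 240.0)  # maximum here: audience calm
CHANNEL_2 = (300.0, 360.0)  # maximum here: audience excited
# Hypothetical: the text does not give the third channel's band, so it is
# modelled as a low/high energy comparison around this split frequency.
SPLIT_HZ = 270.0


def goertzel_power(samples, sample_rate, freq):
    """Squared magnitude of the signal's spectrum at one frequency (Goertzel algorithm)."""
    coeff = 2.0 * math.cos(2.0 * math.pi * freq / sample_rate)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def classify_audience(samples, sample_rate, lo=150.0, hi=400.0, step=5.0):
    """Return 'calm', 'excited' or 'undetermined' from the spectral maximum."""
    freqs = [lo + i * step for i in range(int((hi - lo) / step) + 1)]
    powers = [goertzel_power(samples, sample_rate, f) for f in freqs]
    peak = freqs[powers.index(max(powers))]
    # Hypothetical third channel: does the energy lean low or high?
    low = sum(p for f, p in zip(freqs, powers) if f < SPLIT_HZ)
    high = sum(p for f, p in zip(freqs, powers) if f >= SPLIT_HZ)
    if CHANNEL_1[0] <= peak <= CHANNEL_1[1] and low > high:
        return "calm"  # channel 1, confirmed by a shift to the lower side
    if CHANNEL_2[0] <= peak <= CHANNEL_2[1] and high > low:
        return "excited"  # channel 2, confirmed by a shift to the upper side
    return "undetermined"


if __name__ == "__main__":
    sr = 8000  # sample rate, Hz
    for tone_hz in (220, 330, 280):
        # Synthetic 0.5 s test tone standing in for recorded audio.
        signal = [math.sin(2 * math.pi * tone_hz * t / sr) for t in range(sr // 2)]
        print(tone_hz, "Hz:", classify_audience(signal, sr))
```

Goertzel is used here because only a few dozen frequencies are needed and it requires no external libraries. With NumPy available, a single FFT over the frame would serve the same purpose.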

The need for automatic processing of speech signals is growing: searching sound recordings for the necessary information by hand is not only inefficient but simply impossible.

The BALANCE automated monitoring system collects sound data from every site equipped with D100FC radio modules with three-channel acoustic sensors, and the number of sites is unlimited. The system supports year-round monitoring of the sound spectrum. Data from all sites is collected remotely, so there is no need to visit them.

The radio module draws no more than 1–2 mA, and a size A lithium battery lasts three to six months.
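As a rough cross-check of the battery figures, idealised battery life is simply capacity divided by average current. The text does not state the capacity of the size A cell, so the 3600 mAh below is an assumed placeholder, not a datasheet value. Real-world life also depends on temperature, self-discharge and how the current varies over time.

```python
# Assumption: usable capacity of the size A lithium cell. The text does not
# state it; 3600 mAh is a placeholder, not a datasheet value.
ASSUMED_CAPACITY_MAH = 3600.0
HOURS_PER_DAY = 24.0
DAYS_PER_MONTH = 30.0  # rough average month


def battery_life_months(capacity_mah, current_ma):
    """Idealised battery life in months: capacity divided by average current."""
    hours = capacity_mah / current_ma
    return hours / HOURS_PER_DAY / DAYS_PER_MONTH


if __name__ == "__main__":
    for current_ma in (1.0, 2.0):  # current range quoted in the text
        months = battery_life_months(ASSUMED_CAPACITY_MAH, current_ma)
        print(f"{current_ma:.0f} mA -> {months:.1f} months")
```

Under these assumptions, a steady 1 mA gives 5.0 months and 2 mA gives 2.5 months. Since 1–2 mA is an upper bound on consumption, a lower average current would stretch these figures.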

Additional sensors for temperature, humidity and volatile organic compounds (VOC) can be connected.

DJV-COM offers the BALANCE hardware and software platform and is looking for partners interested in cooperation.

A BALANCE mobile app is available for download.

More information is available at www.djv-com.org. We will be glad to receive your recommendations and suggestions at office@djv-com.net.